Results

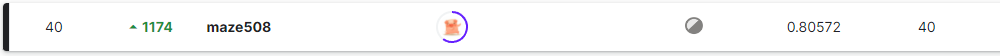

Rank: 40 / 2302 (Top 2%)

Overview

Jigsaw has already hosted 3 previous competitions, but its 4th is like none before, both in the data provided and in the final private leaderboard shake-up: most of the top 50 finishers moved up 1000+ positions from their public leaderboard placings.

A brief overview of the competition can be found here.

Unlike most previous Natural Language Processing (NLP) competitions, this one provides no training data, only a validation set, in the hope of encouraging participants to share and use open-source data (such as data from previous Jigsaw competitions) as training sets. This makes the problem far more open-ended than in most other competitions. Funnily enough, I was handed a very similar open-ended problem at work a few months back, with little training data and no examples of test set data.

The open-ended nature of the problem leaves more room for exploration and creativity, but it also makes experiment scores and progress much harder to track because of the larger unknowns. Nobody can be certain of the data distribution of the private leaderboard, and with such large disparities between Public Leaderboard (LB) scores and Cross Validation (CV) scores, it was difficult (at least for me) to judge early on what was working and what was not.

Data and Cross Validation (CV)

Data

The data I chose to use for the competition can be found below:

Cross Validation (CV)

Since LB and CV scores were essentially inversely related in my experiments, I measured the performance of each experiment with a blend of the two: 0.25 × LB + 0.75 × CV. UnionFind and StratifiedKFold were used to split the data into folds for training.
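To make the UnionFind step more concrete, here is a minimal sketch, assuming the validation data is a list of (less_toxic, more_toxic) comment pairs: comments that share a pair are merged into one group, and whole groups are assigned to folds so a comment never sits in both the training and held-out side of a split. The names `UnionFind`, `group_pairs`, `assign_folds` and `blended_score` are hypothetical, and the size-balanced fold assignment is a simplified stand-in for the StratifiedKFold step (the stratification target is not stated above). `blended_score` is just the 0.25 × LB + 0.75 × CV weighting.

```python
from collections import defaultdict


class UnionFind:
    """Disjoint-set structure with path compression and union by size."""

    def __init__(self):
        self.parent = {}
        self.size = {}

    def find(self, x):
        # Lazily register unseen items as their own singleton set.
        if x not in self.parent:
            self.parent[x] = x
            self.size[x] = 1
        root = x
        while self.parent[root] != root:
            root = self.parent[root]
        # Path compression: point every node on the path directly at the root.
        while self.parent[x] != root:
            self.parent[x], x = root, self.parent[x]
        return root

    def union(self, a, b):
        ra, rb = self.find(a), self.find(b)
        if ra == rb:
            return
        # Attach the smaller tree under the larger one.
        if self.size[ra] < self.size[rb]:
            ra, rb = rb, ra
        self.parent[rb] = ra
        self.size[ra] += self.size[rb]


def group_pairs(pairs):
    """Return one compact group id per (less_toxic, more_toxic) pair."""
    uf = UnionFind()
    for less, more in pairs:
        uf.union(less, more)
    roots = {}
    # Map each connected component's root to a small integer id.
    return [roots.setdefault(uf.find(less), len(roots)) for less, _ in pairs]


def assign_folds(group_ids, n_folds=5):
    """Hypothetical stand-in for the fold split: each whole group goes to one
    fold, largest groups first, always into the currently smallest fold."""
    members = defaultdict(list)
    for idx, g in enumerate(group_ids):
        members[g].append(idx)
    folds = [0] * len(group_ids)
    fold_sizes = [0] * n_folds
    for g in sorted(members, key=lambda g: -len(members[g])):
        target = fold_sizes.index(min(fold_sizes))
        for idx in members[g]:
            folds[idx] = target
        fold_sizes[target] += len(members[g])
    return folds


def blended_score(lb, cv, lb_weight=0.25):
    """Experiment score used to compare runs: 0.25 * LB + 0.75 * CV."""
    return lb_weight * lb + (1 - lb_weight) * cv
```

Grouping before splitting matters because the same comment can appear in several validation pairs; splitting pairs at random could let a comment seen in training reappear in the held-out fold and inflate CV.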

Model

Transformers

I started the competition early but took a long break and only came back 2 weeks before it ended, so I did not have much time to explore a variety of models (and I trained only on Kaggle GPUs). Hence I only trained the common transformer variants.

Throughout the competition I experimented with Binary Cross Entropy (BCE) and Margin Ranking Loss (MRL); in my case, MRL always performed better than BCE.
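For reference, a minimal pure-Python sketch of the two losses. The ranking loss uses the same formula as PyTorch's `torch.nn.MarginRankingLoss` with mean reduction, max(0, -y · (x1 - x2) + margin), fixing y = 1 so that x1 (the score of the more toxic comment) should beat x2 (the less toxic one). The BCE version scores each comment on its own against a 0/1 label. The function names and the margin of 0.5 are illustrative assumptions, not the settings behind the models in the table.

```python
import math


def margin_ranking_loss(more_scores, less_scores, margin=0.5):
    """Mean of max(0, -y * (x1 - x2) + margin) with y = 1 for every pair:
    the more toxic comment should outscore the less toxic one by `margin`."""
    losses = [max(0.0, -(x1 - x2) + margin) for x1, x2 in zip(more_scores, less_scores)]
    return sum(losses) / len(losses)


def bce_with_logits(logits, targets):
    """Mean binary cross-entropy computed on raw logits (numerically stable form)."""
    total = 0.0
    for z, t in zip(logits, targets):
        # max(z, 0) - z*t + log(1 + exp(-|z|)) equals
        # -[t*log(sigmoid(z)) + (1 - t)*log(1 - sigmoid(z))]
        total += max(z, 0.0) - z * t + math.log1p(math.exp(-abs(z)))
    return total / len(logits)
```

For example, `margin_ranking_loss([2.0, 0.1], [0.5, 0.4])` gives about 0.4: the first pair already clears the margin and contributes 0, while the second is ranked the wrong way round. The ranking loss only constrains the order within each pair, whereas BCE needs an absolute label for every comment.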

The transformer model performance can be found below:

| No. | Model | Dataset | CV | Loss Function |
|---|---|---|---|---|
| 1 | RoBERTa Base | Toxic + Multilingual + Ruddit + Malignant | 0.69569 | MRL |
| 2 | RoBERTa Base | Toxic + Multilingual + Ruddit + Malignant | 0.69465 | BCE |
| 3 | RoBERTa Large | Toxic + Multilingual + Ruddit + Malignant | 0.70021 | MRL |
| 4 | RoBERTa Large | Toxic + Multilingual + Ruddit + Malignant | 0.69615 | BCE |
| 5 | DeBERTa Base | Toxic + Multilingual + Ruddit + Malignant | 0.70130 | MRL |
| 6 | DeBERTa Base | Toxic + Multilingual + Ruddit + Malignant | 0.70101 | BCE |
| 7 | DeBERTa Base | Toxic + Multilingual + Malignant | 0.70115 | MRL |
| 8 | DeBERTa Base | Toxic + Multilingual + Malignant | 0.69867 | BCE |

Linear Models

Contrary to the general direction of the discussions, I felt that linear models were important (to an extent). Linear models performed well on the LB but poorly on CV, while transformers performed poorly on the LB but well on CV. Although it usually makes sense to trust your CV, I believe it may not have been completely reliable in this case.

Although the public LB is computed on only 5% of the test data, and we cannot judge the remaining 95% used for the private LB, it is not useless either: it gives a rough idea of what the data distribution of the final private leaderboard looks like. This matters especially in this competition, because the distribution of your training set is likely to be very different from that of the test sets you are evaluated on (since you choose the training data yourself), and you cannot be sure that the provided validation data has a similar distribution to the leaderboard data. Hence both LB and CV scores are severely limited in this respect. On the final day of the competition, I convinced myself to add 1 linear TF-IDF model to my RoBERTa ensemble and selected it as my 2nd submission, even though it did not have the best CV / LB score. I reasoned that the public LB had its importance and that I could not fully trust my CV scores.

The additional linear model gave me a small boost on the final private leaderboard; had I not made that last-minute decision, I would have slipped 10+ places on the final leaderboard.

| No. | Model | Dataset | CV |
|---|---|---|---|
| 9 | TF-IDF Ridge Regression | Toxic + Multilingual + Ruddit + Malignant | 0.67844 |
| 10 | TF-IDF Ridge Regression | Toxic + Multilingual + Malignant | 0.65105 |

The linear model is heavily inspired by @readoc’s implementation here; I personally only did some hyperparameter tuning and made some data engineering changes.

The final submission consists of models no. 1, 3, 5 and 9 (equal weights).
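A minimal sketch of an equal-weight blend, under two loudly stated assumptions. First, because a TF-IDF ridge model and the transformers produce scores on different scales, this version converts each model's scores to normalised ranks before averaging; rank averaging is my assumption, since the write-up only says the weights were equal. Second, `pairwise_agreement` assumes the CV numbers in the tables are the share of validation (less_toxic, more_toxic) pairs that a model orders correctly. All function names are hypothetical.

```python
def to_ranks(scores):
    """Map scores to average ranks scaled into [0, 1]; tied scores share a rank."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied scores.
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        avg = (i + j) / 2
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    denom = max(len(scores) - 1, 1)
    return [r / denom for r in ranks]


def equal_weight_ensemble(model_scores, use_ranks=True):
    """Average per-model score lists with equal weights.

    model_scores: one list of scores per model, aligned by comment index.
    """
    if use_ranks:
        model_scores = [to_ranks(s) for s in model_scores]
    n_models = len(model_scores)
    return [sum(col) / n_models for col in zip(*model_scores)]


def pairwise_agreement(scores, pairs):
    """Fraction of (less_idx, more_idx) pairs where the more toxic comment scores higher."""
    hits = sum(scores[more] > scores[less] for less, more in pairs)
    return hits / len(pairs)
```

With `use_ranks=False` the function reduces to a plain mean of raw scores, which is only sensible if the models already output comparable scales.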

Fine Tuning

I spent most of my time tuning the learning rates and decay rates of my RoBERTa models. In hindsight, I could have spent more of that time on dataset preparation and experimenting with custom loss functions instead. Ultimately, hyperparameter tuning was not very effective at raising my CV and LB scores, although it did boost them by a small amount.
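The write-up does not say which "decay rate" was tuned. One common reading in transformer fine-tuning is layer-wise learning-rate decay, where layers closer to the input get progressively smaller learning rates. Assuming that reading, here is a minimal sketch of how a base learning rate and a decay factor turn into per-layer rates; the function name `layerwise_lrs` and the exact scheme (head at the base rate, embeddings one step below the lowest encoder layer) are hypothetical choices, not the author's confirmed setup.

```python
def layerwise_lrs(base_lr, decay, num_layers):
    """Per-group learning rates for layer-wise LR decay (an assumed scheme).

    The task head trains at base_lr; encoder layer i (0 = closest to the input)
    gets base_lr * decay ** (num_layers - i); embeddings sit one step below layer 0.
    """
    lrs = {"head": base_lr}
    for i in range(num_layers):
        lrs[f"layer_{i}"] = base_lr * decay ** (num_layers - i)
    lrs["embeddings"] = base_lr * decay ** (num_layers + 1)
    return lrs
```

For a 12-layer base model this would be called as `layerwise_lrs(2e-5, 0.9, 12)` (example values only). In PyTorch, each entry would typically become one optimizer parameter group such as `{"params": layer.parameters(), "lr": lrs["layer_3"]}` passed to `torch.optim.AdamW`, so tuning then means sweeping just `base_lr` and `decay`.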

What didn't work

- Masked Language Models (MLM)

- Boosted trees (funnily enough, this was the first thing I tried, but I couldn't make it work. Other competitors found success with this approach, and their solutions can be found here.)

Only the transformer inference code and the model checkpoint datasets are shared. The evaluation repository can be found here.

Model checkpoint locations: